VMware NSX ALB (Avi Networks) and NSX-T Integration, Installation

Note: I created a common baseline for pre-requisites in this previous post. We'll be following VMware's Avi + NSX-T Design guide.

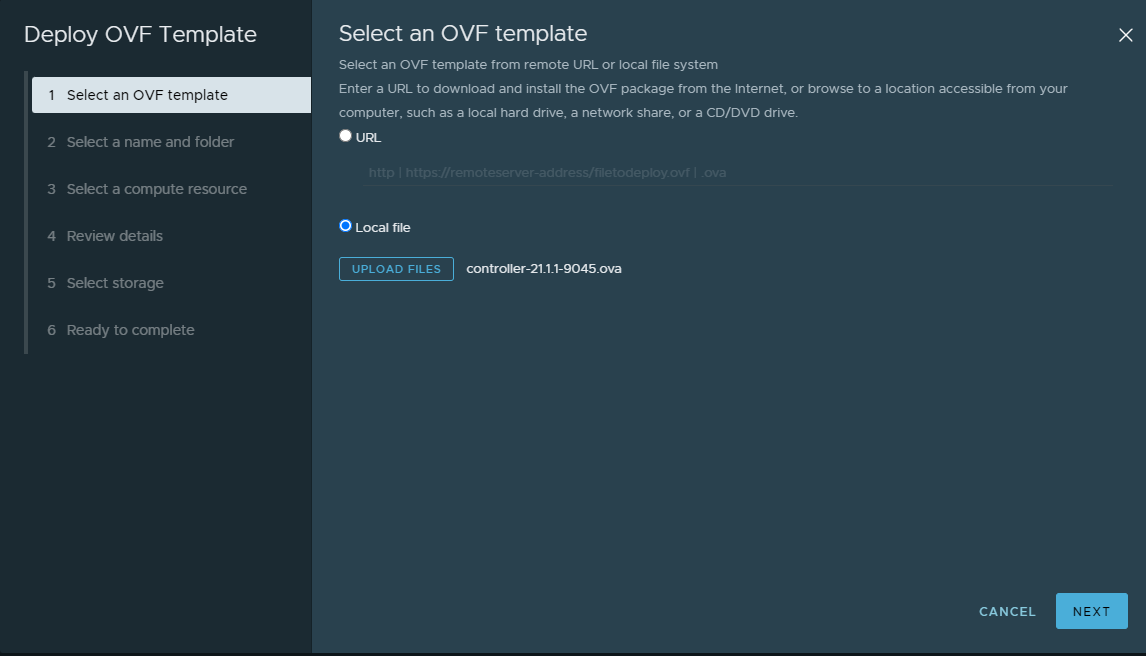

This will be a complete re-install. Avi Vantage appears to develop some tight-coupling issues when the same vCenter is used for both Layer 2 and NSX-T deployments - which is not an issue that most people will typically have. Let's start with the OVA deployment:

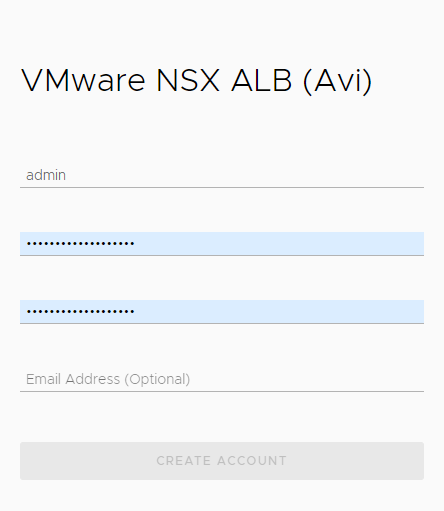

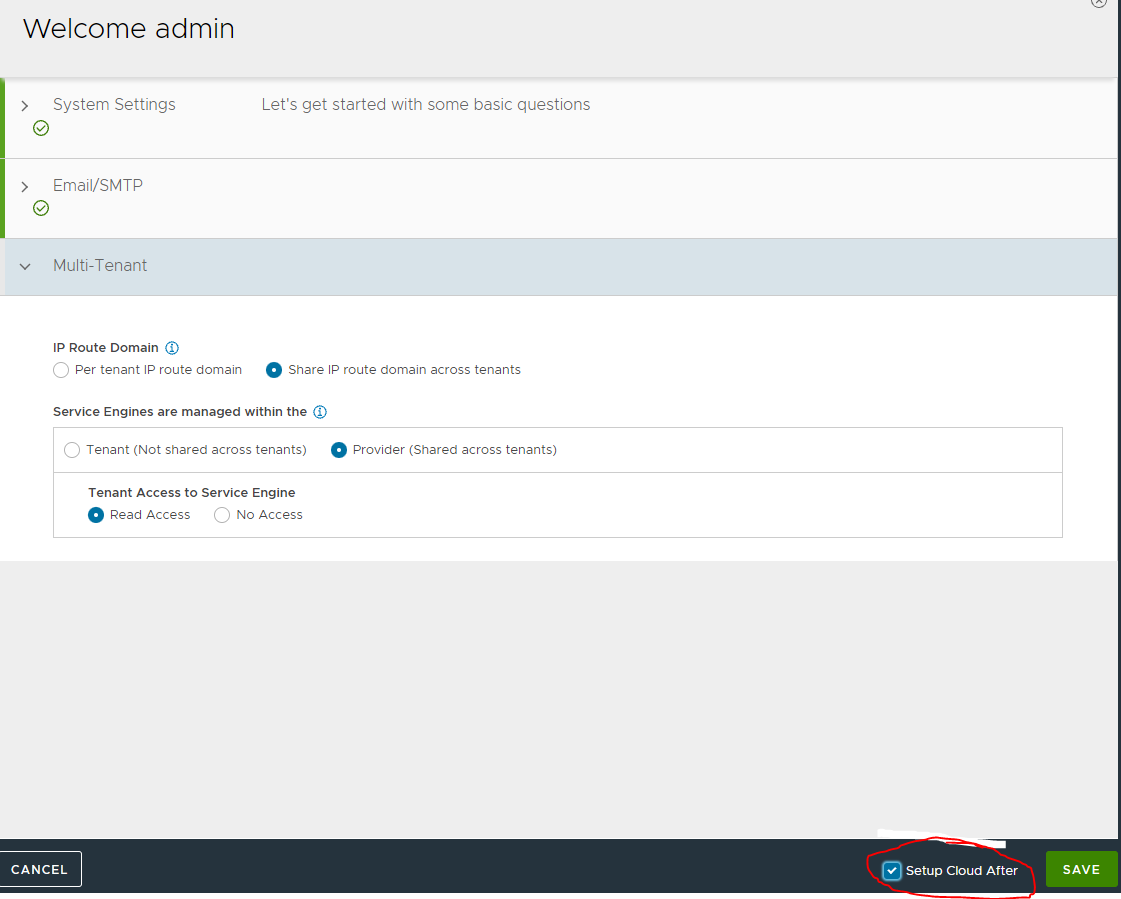

Initial setup here will be very different compared to a typical vCenter standalone or read-only deployment. Complete only the bare minimum of the setup wizard:

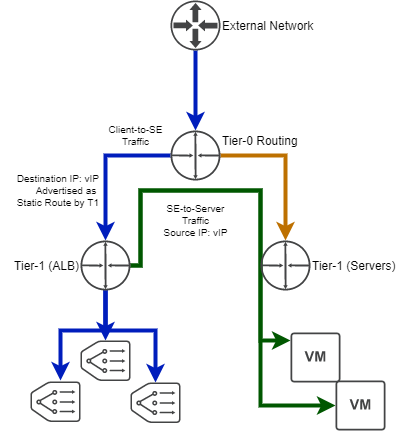

With a more "standard" deployment methodology, the Avi Service Engines will be running on their own Tier-1 router, and leveraging Source-NAT (misnomer, since it's a TCP proxy) for "one-arm load balancing":

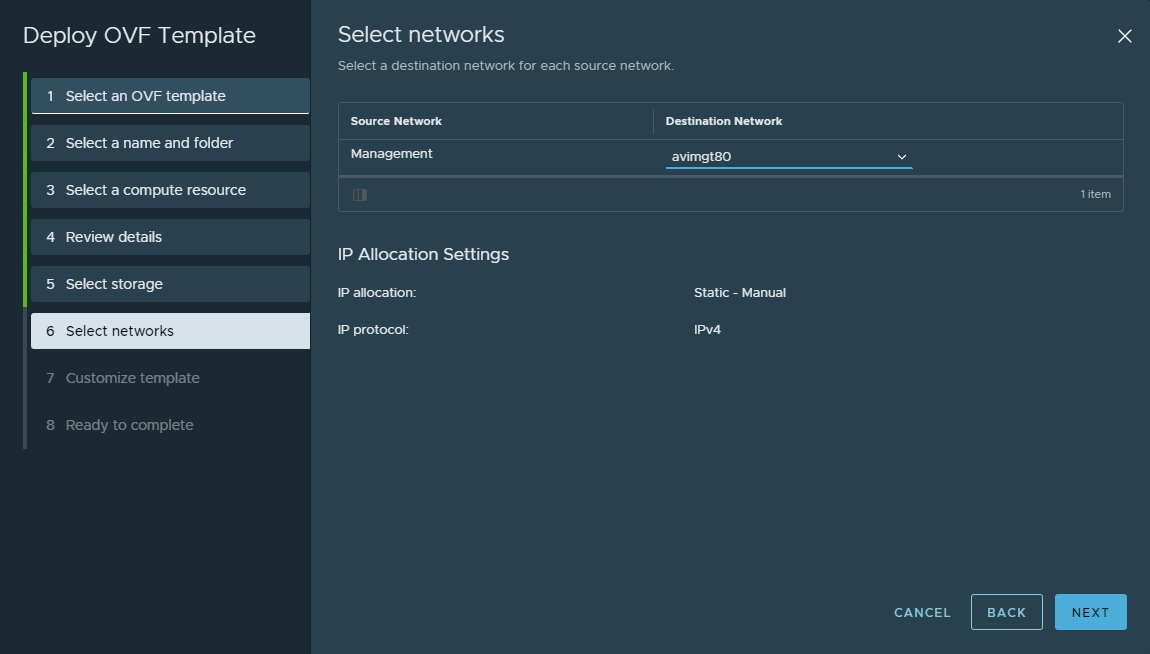

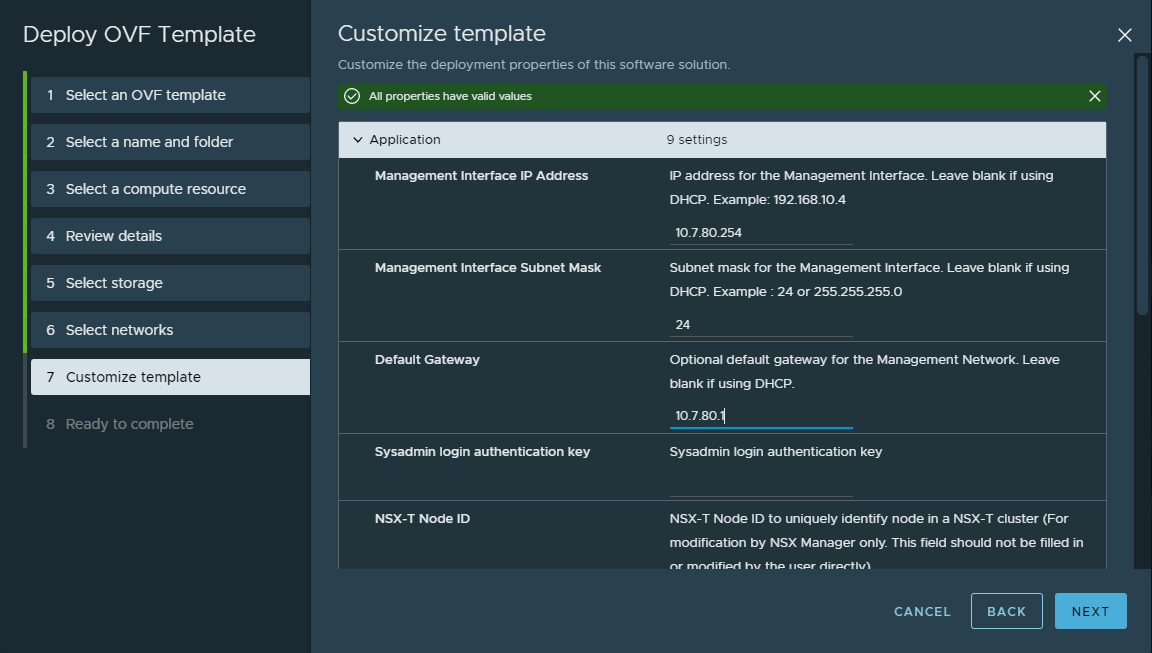

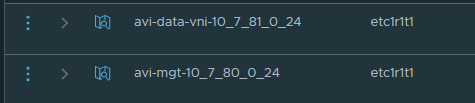

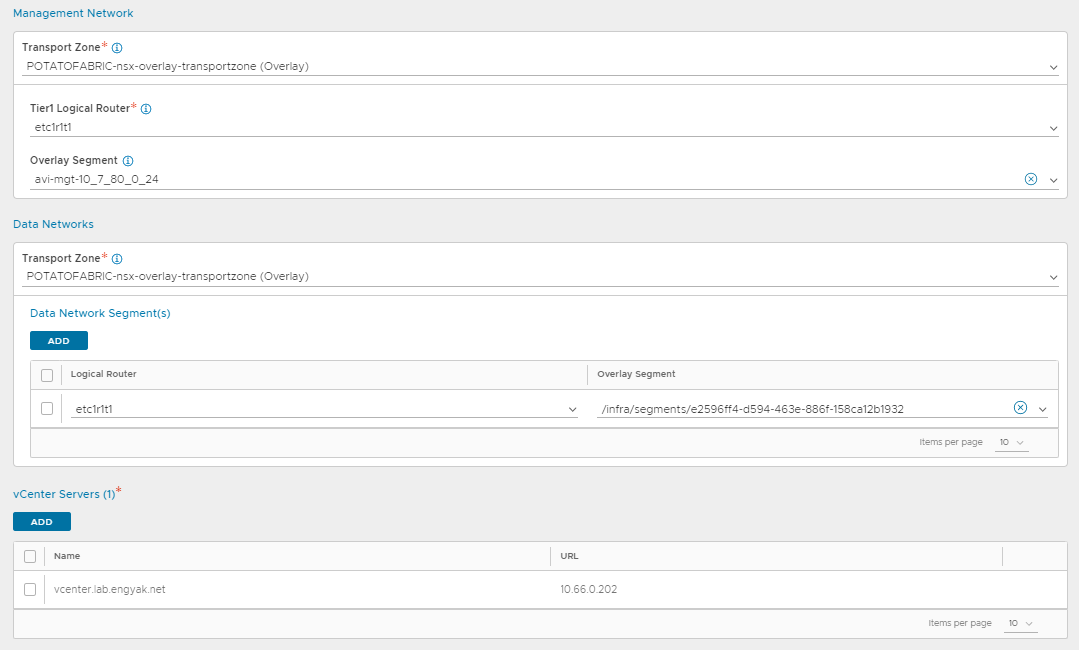

To perform this, we'll need to add two segments to the ALB Tier-1: one for management, and one for vIPs. I have created the following NSX-T segments, with 10.7.80.0/24 running DHCP and 10.7.81.0/24 for vIPs:

Note: I used underscores in this segment name. In my own testing, both underscores and hyphens (`_` and `-`) are illegal characters. Avi's NSX-T Cloud Connector will report "No Transport Nodes Found" if it cannot match the segment name due to these characters.

Note: If you configure an NSX-T cloud and discover this issue, you will need to delete and re-add the cloud after fixing the names!
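Since fixing this after the fact means deleting and re-adding the cloud, it may be worth checking segment names up front. Here's a minimal sketch of that check; it only encodes the two characters I tripped over in my own testing, and the segment names in it are hypothetical placeholders, not the ones from my lab:

```python
import re

# The two characters that caused trouble in my testing (see the note above).
# This is an observation from one lab, not a documented Avi/NSX-T rule.
SUSPECT_CHARS = re.compile(r"[_-]")


def suspect_characters(segment_name: str) -> list[str]:
    """Return the sorted, de-duplicated suspect characters found in a name."""
    return sorted(set(SUSPECT_CHARS.findall(segment_name)))


if __name__ == "__main__":
    # Hypothetical segment names, for illustration only.
    for name in ["alb_mgmt", "alb-vips", "albmgmt"]:
        found = suspect_characters(name)
        status = "rename before adding the cloud" if found else "ok"
        print(f"{name}: {status} {found}")
```

Anything flagged here is a candidate for renaming before you create the NSX-T cloud in Avi.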

Note: IPv6 is being used, but I will not share my globally routable prefixes.

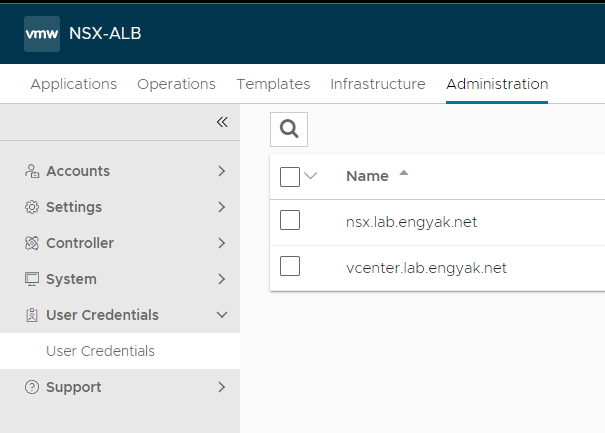

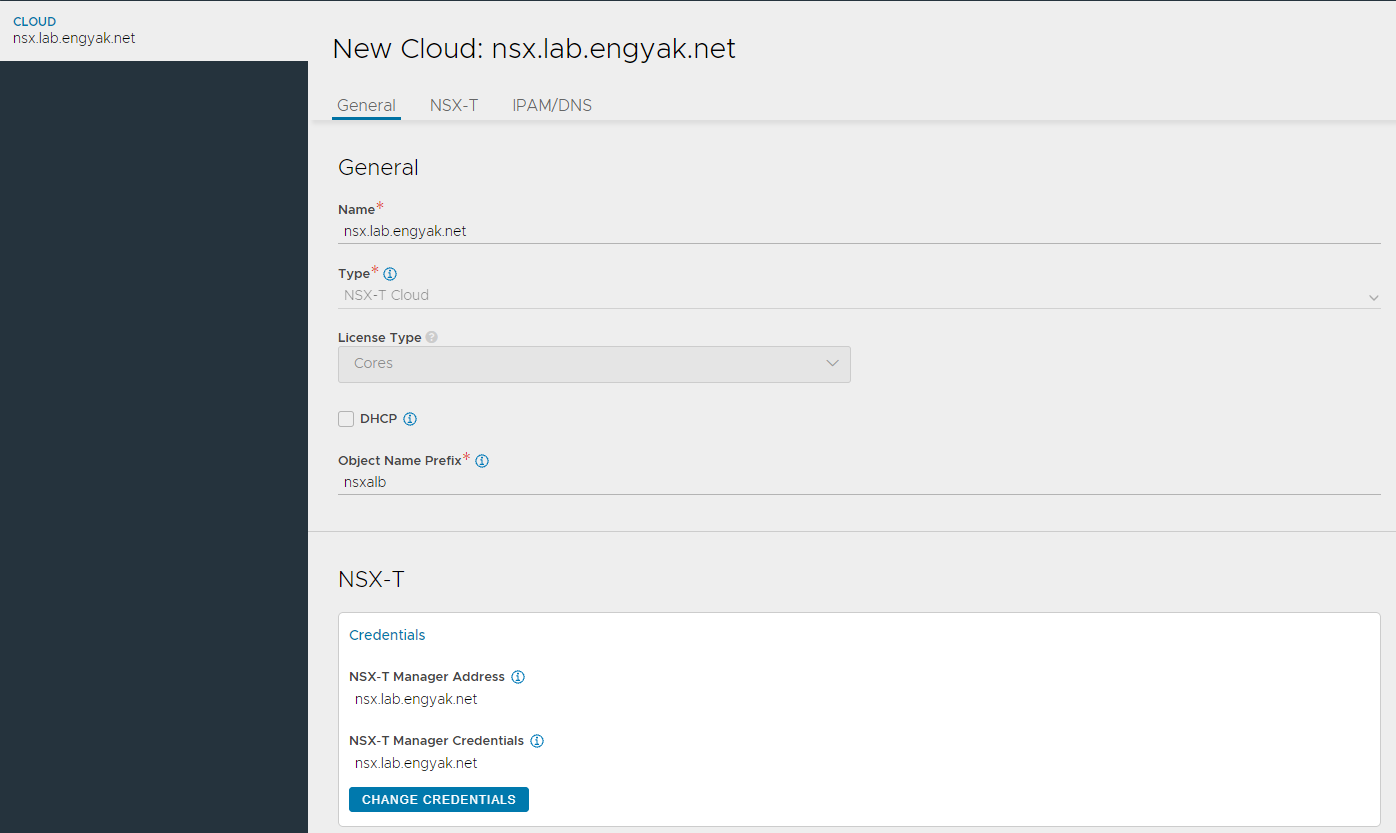

First off, let's create NSX-T Manager and vCenter Credentials:

There is one thing that needs to be created on vCenter as well - a content library. Just create a blank one and label it accordingly, then proceed with the following steps:

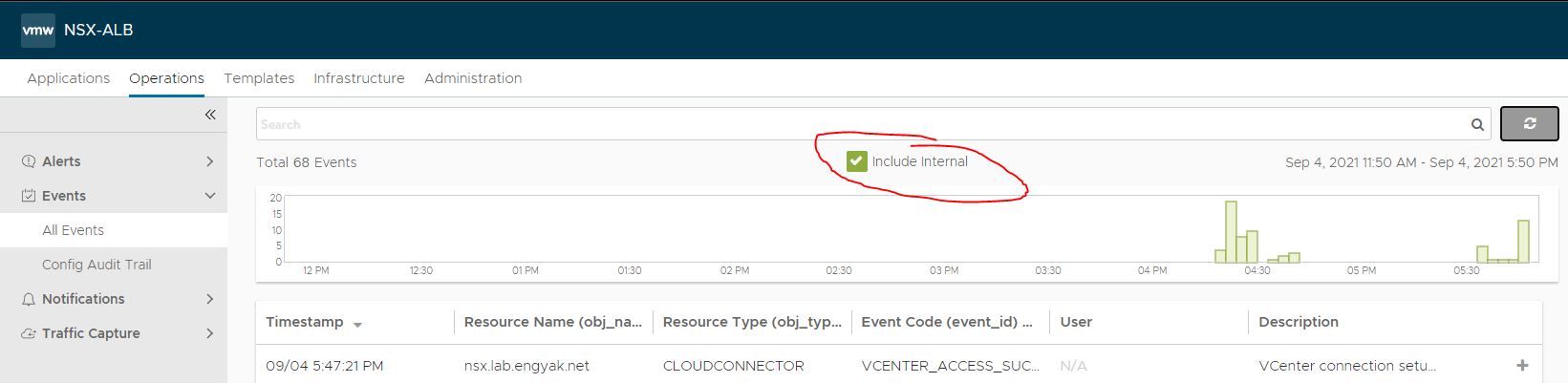

Click Save, and get ready to wait. The Avi controller has automated quite a few steps, and it will take a while to run. If you want, the way to track any issue in NSX ALB is to navigate to Operations -> Events -> Show Internal:

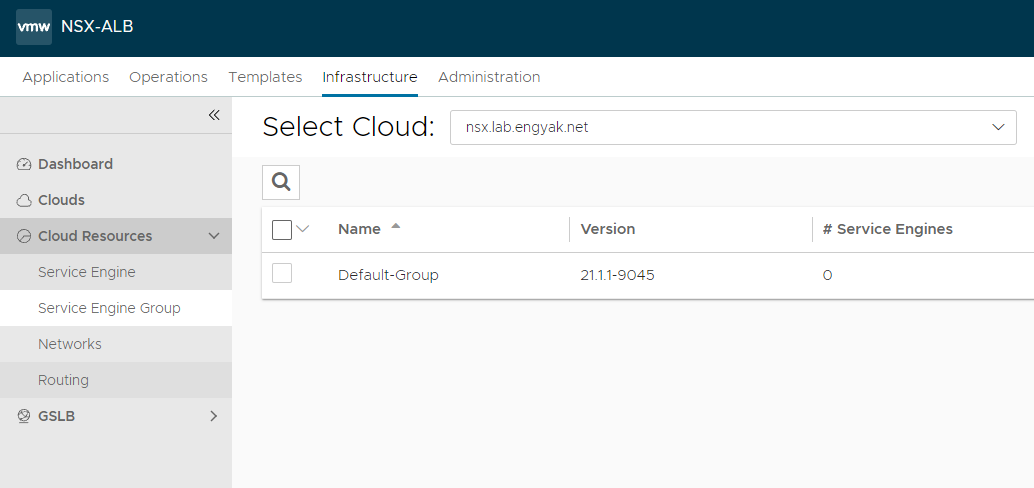

Once the NSX Cloud is reporting as "Complete" under Infrastructure -> Dashboard, we need to specify some additional data to ensure that the service engines will deploy. To do this, we navigate to Infrastructure -> Cloud Resources -> Service Engine Groups, and select the Cloud:

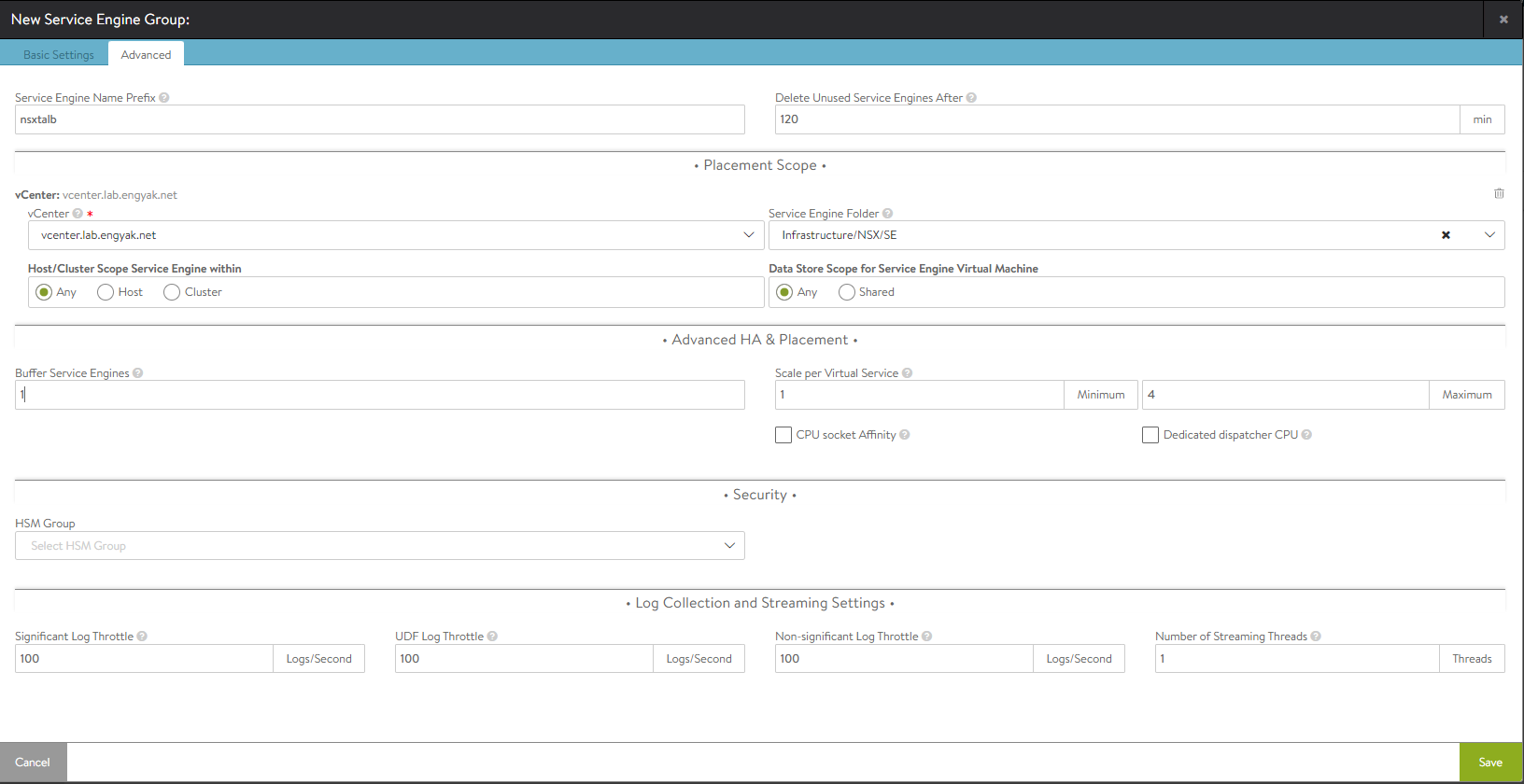

Then let's build a Service Engine Group. This will be the compute resource attached to our vIPs. Here I configured a naming convention and a compute target - and it can automatically drop SEs into a specific folder.

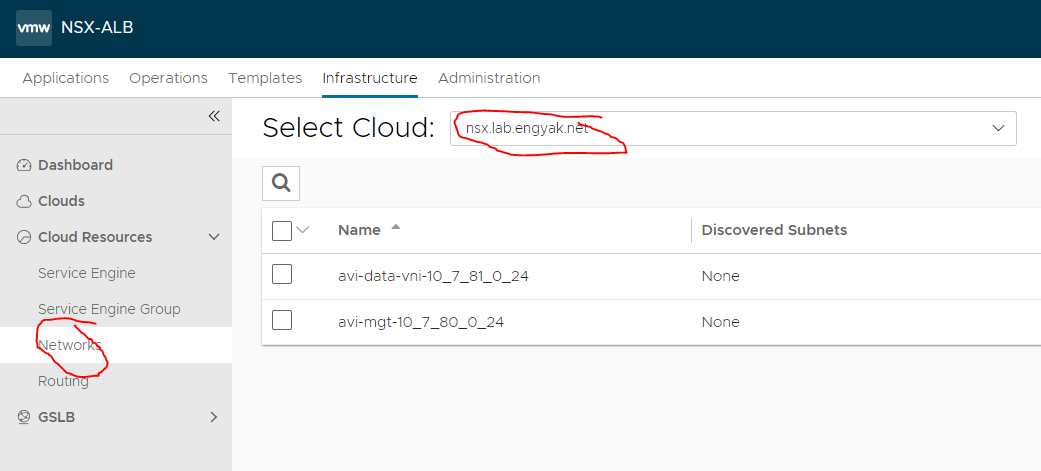

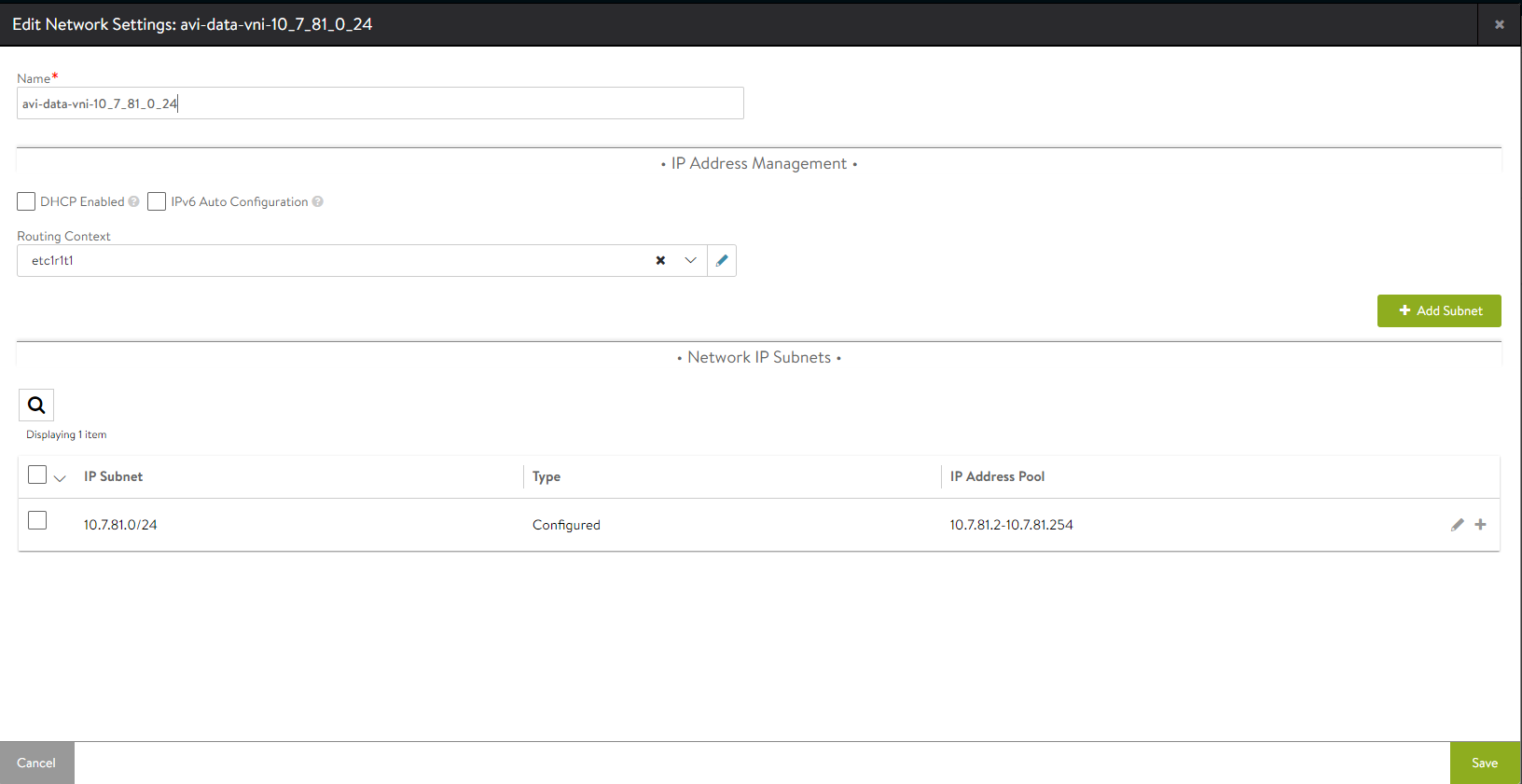

The next step here is to configure the built-in IPAM. Let's add an IP range under Infrastructure -> Cloud Resources -> Networks by editing the appropriate network ID. Note that you will need to select the NSX-T cloud to see the correct network:

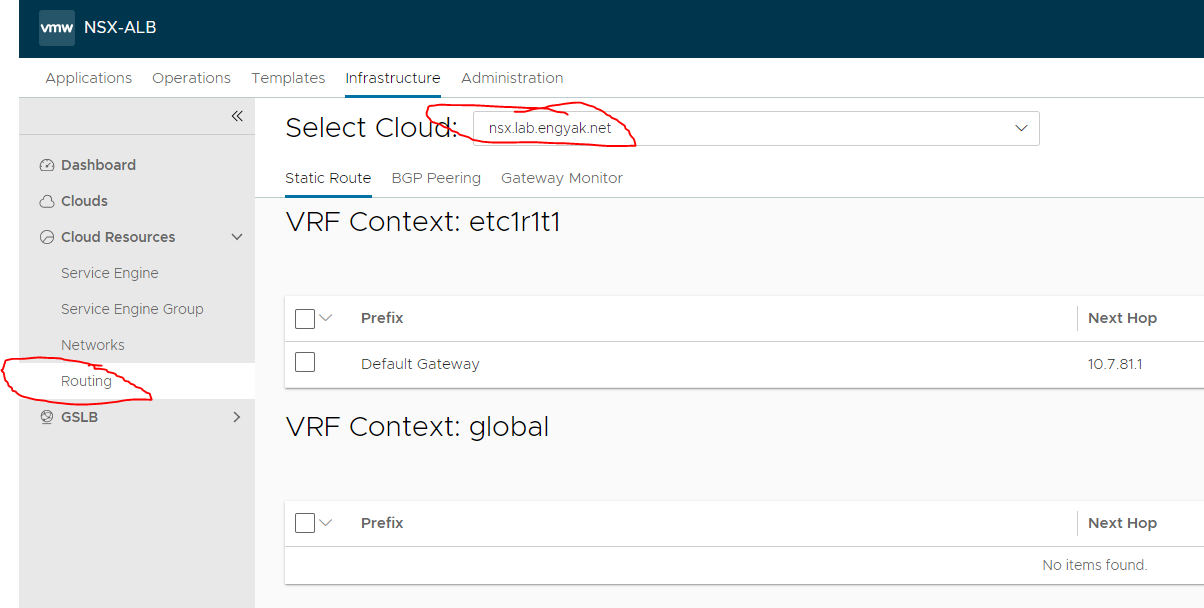
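Before typing a range into the IPAM, it can help to confirm it actually sits inside the vIP segment. Below is a small sketch using Python's standard `ipaddress` module. The subnet is the 10.7.81.0/24 vIP segment from earlier; the start and end addresses are made-up examples, so substitute your own:

```python
import ipaddress


def range_in_subnet(start: str, end: str, subnet: str) -> bool:
    """Check that start..end is an ordered range of usable hosts in subnet."""
    net = ipaddress.ip_network(subnet)
    first = ipaddress.ip_address(start)
    last = ipaddress.ip_address(end)
    # Exclude the network and broadcast addresses at either end of the range.
    usable = first != net.network_address and last != net.broadcast_address
    return first in net and last in net and first <= last and usable


if __name__ == "__main__":
    # 10.7.81.0/24 is the vIP segment; the range itself is a hypothetical example.
    print(range_in_subnet("10.7.81.100", "10.7.81.200", "10.7.81.0/24"))
```

This only validates the arithmetic. It does not check for overlap with DHCP scopes or addresses already in use.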

Those of you who have been LTM Admins will appreciate this. Avi SEs also perform "Auto Last Hop," so you can reach a vIP without a default route, but monitors (health checks) will fail without one. The spot to configure the custom routes is under Infrastructure -> Cloud Resources -> Routing:

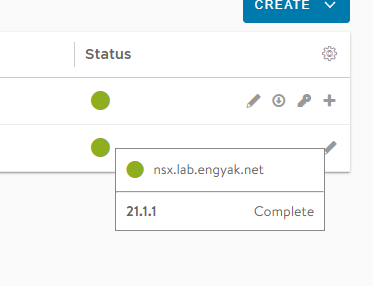
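A static route is only useful if its next hop is reachable on a directly connected network. As a rough sanity check, here's a sketch that tests a next hop against the two segments created earlier. The gateway address `10.7.81.1` is an assumption for illustration; use whatever your Tier-1 actually presents on the segment:

```python
import ipaddress


def next_hop_is_on_link(next_hop: str, connected_subnets: list[str]) -> bool:
    """Return True if next_hop falls inside any directly connected subnet."""
    hop = ipaddress.ip_address(next_hop)
    return any(hop in ipaddress.ip_network(s) for s in connected_subnets)


if __name__ == "__main__":
    # The management and vIP segments from this post.
    connected = ["10.7.80.0/24", "10.7.81.0/24"]
    # Hypothetical gateway address on the vIP segment.
    print(next_hop_is_on_link("10.7.81.1", connected))
```

If this returns `False` for your default route's next hop, the SE has no on-link path to the gateway, and health checks to off-segment pool members would have nowhere to go.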

Finally, let's verify that the NSX-T Cloud is fully configured. An interesting thing I saw here is that Avi 21 shows an unconfigured or "In Progress" cloud as green now, so we'll have to mouse over the cloud status to check in on it.

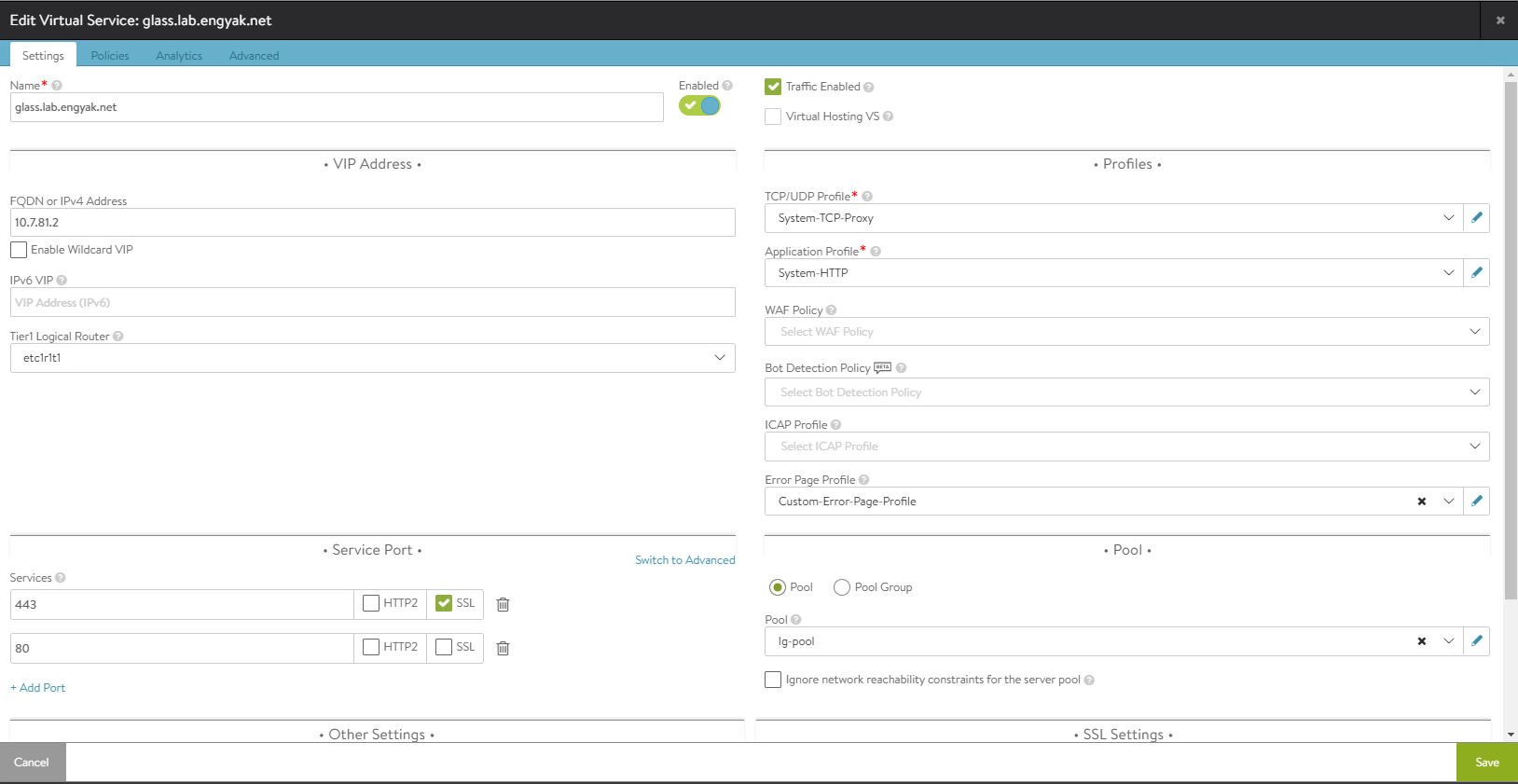

Now that everything is configured (at least in terms of infrastructure), Avi will not deploy Service Engines until there's something to do! So let's do that:

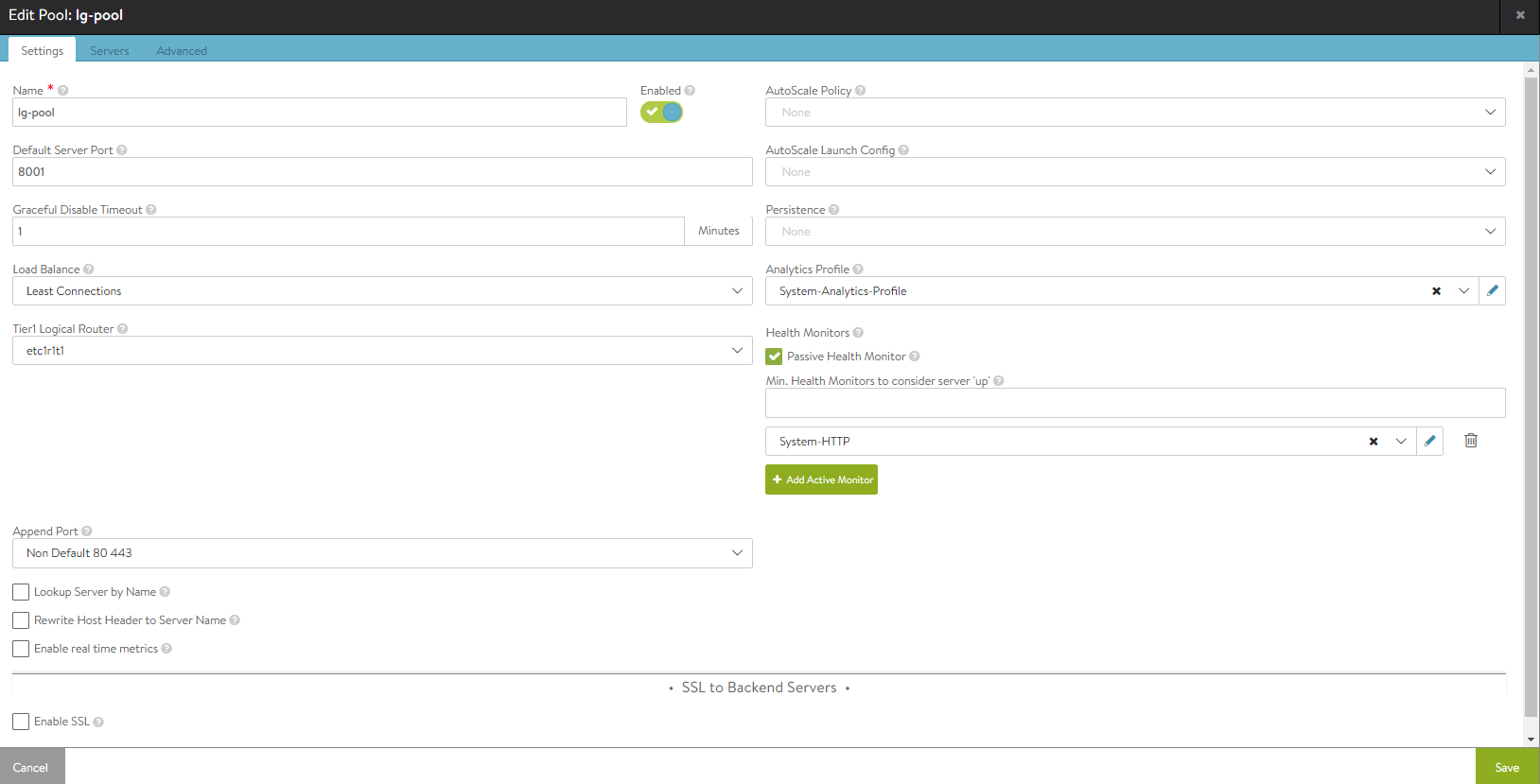

Let's define a pool (back-end server resources):

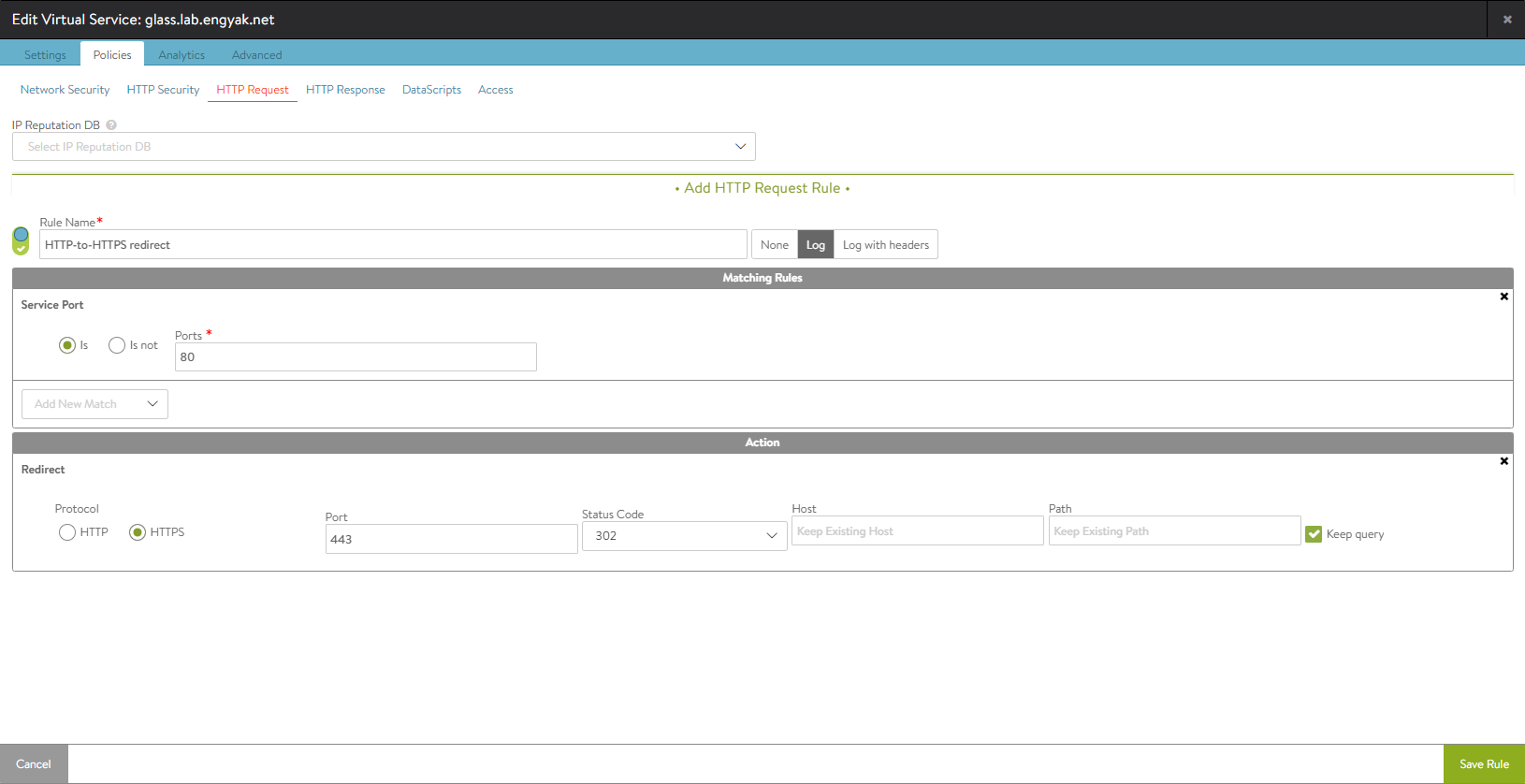

Let's set an HTTP-to-HTTPS redirect as well:

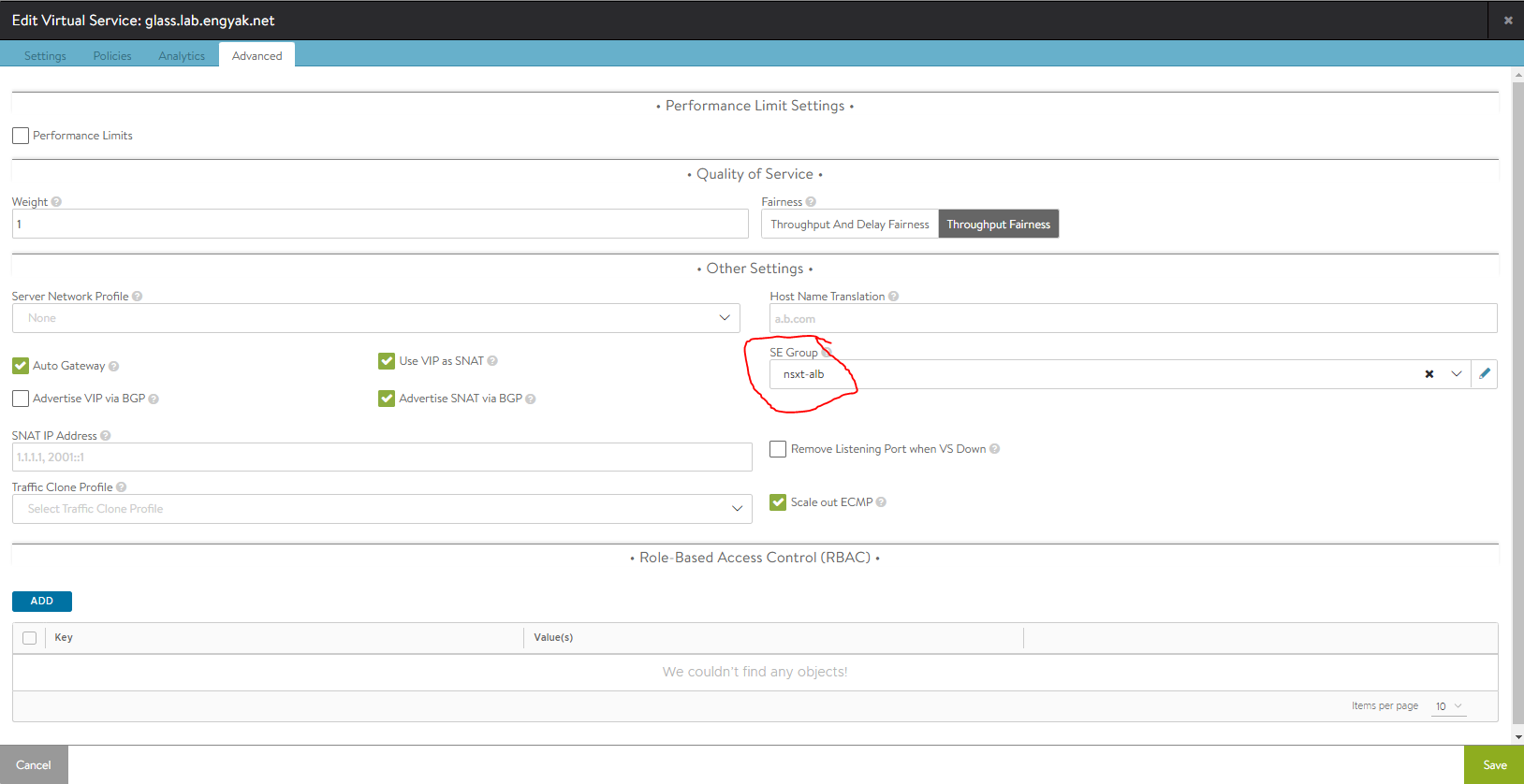

Finally, let's make sure that the correct SE group is selected:

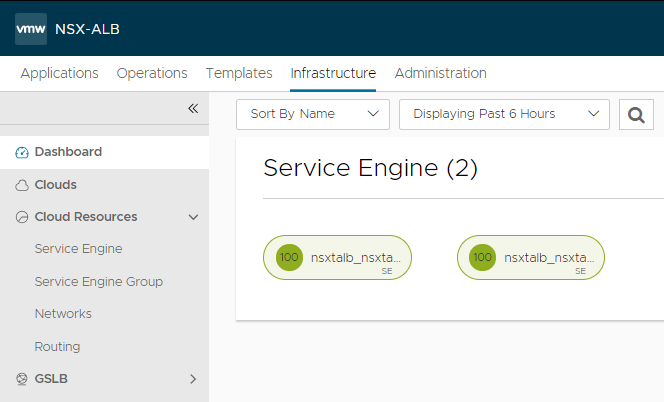

And that's it! You're up and running with Avi Vantage 21! After a few minutes, you should see deployed service engines:

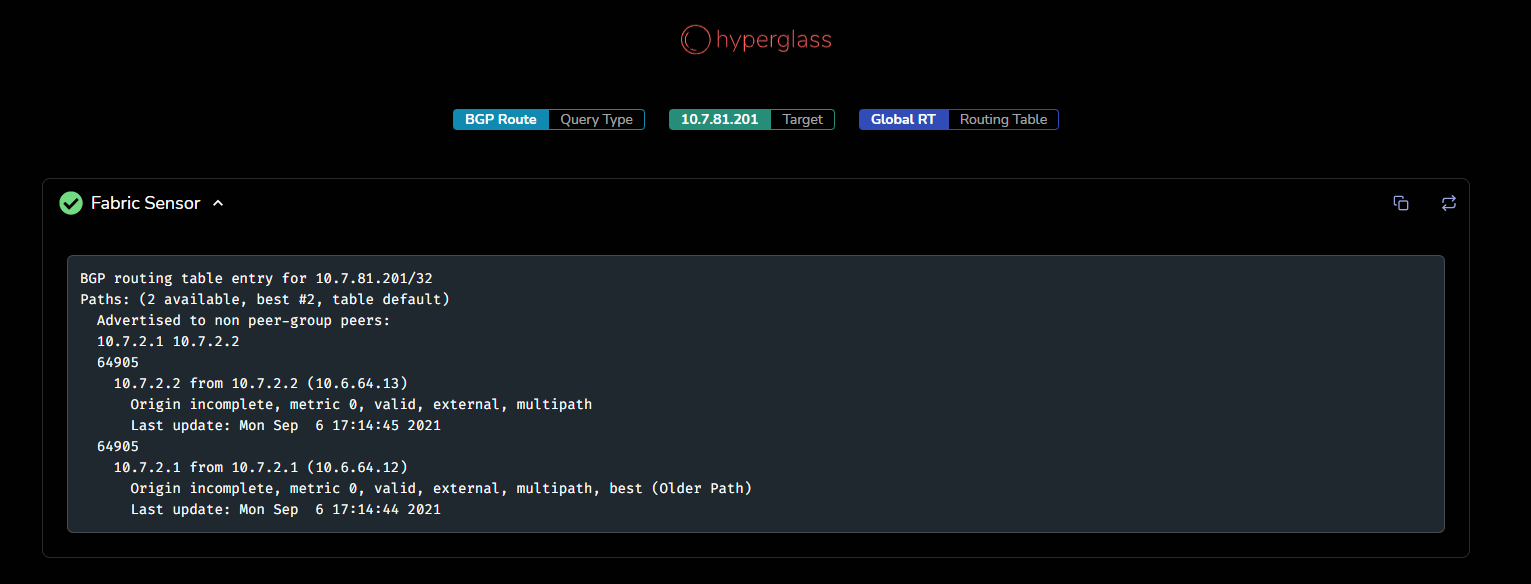

The service I configured is also now up. In this case, I'm using Hyperglass, and I can leverage the load-balanced vIP to check and see what the route advertisement from Avi looks like. As you can see, it's firing a multipath BGP host address: