Network Experiments with VMware NSX-T and Cisco Modeling Labs

Cisco Modeling Labs (CML) has turned out to be a great tool for deploying virtual network resources, but the "only Cisco VNFs" limitation is a bit much.

Let's use this opportunity to really take advantage of the capabilities that NSX-T has for virtual network labs!

Overview

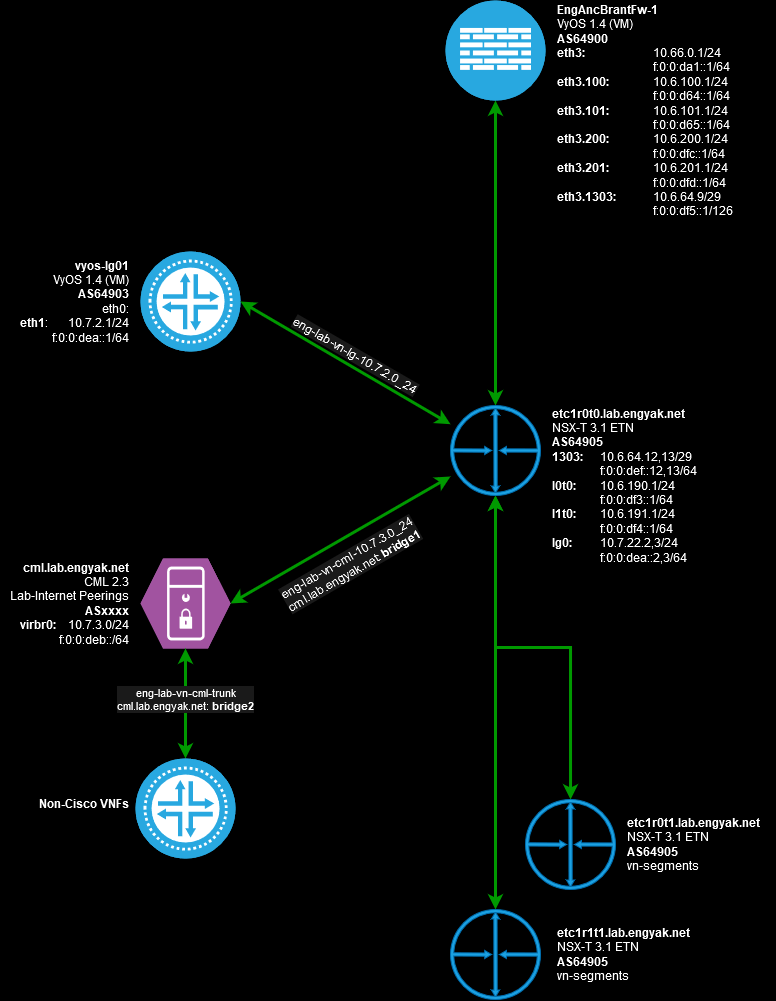

For the purpose of lab construction, I will use an existing Tier-0 router and uplinks to facilitate the "basics", e.g. internet connectivity and remote accessibility.

Constructing the NSX-T "Outside" Environment

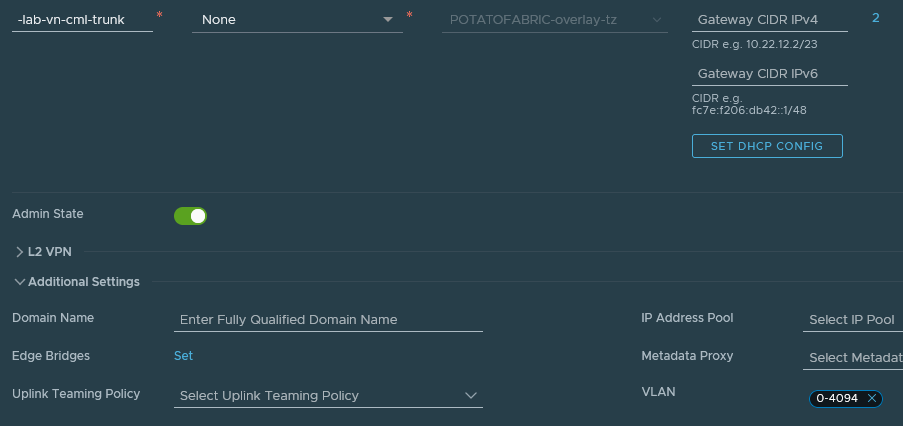

NSX-T has a few superpowers when it comes to constructing network topologies. We need one in particular to execute this: Q-in-VNI encapsulation, which is enabled on a per-segment basis. Compared to NSX-V, this is simple and straightforward. A user simply applies the allowed VLAN range to the segment, in this case _**eng-lab-vn-cml-trunk**_:

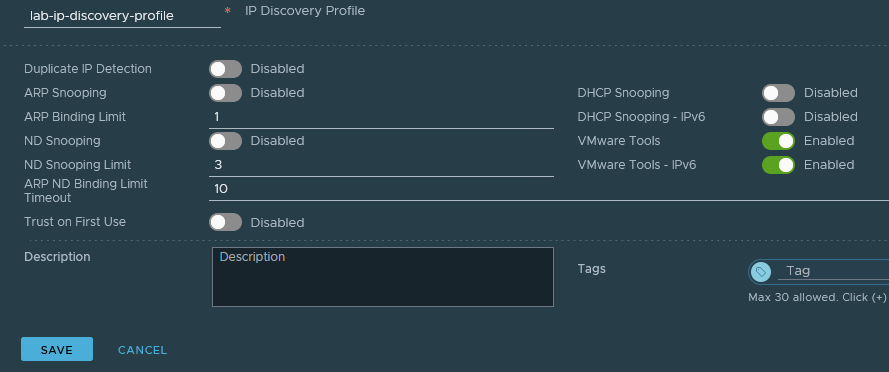

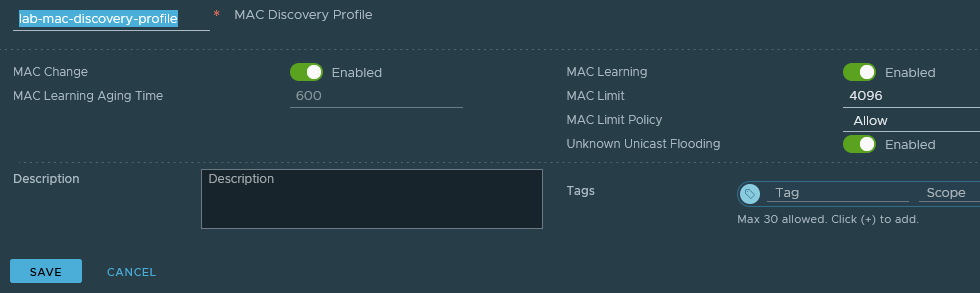

We'll also need to disable some of the security features that normally protect the network, by creating and applying the following segment profiles. The objective is to limit ARP snooping and force NSX to learn MAC addresses over the segment instead of from the controller.
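As a rough illustration of those profiles, here is a minimal Python sketch that only builds request bodies and paths, and sends nothing. It assumes the NSX-T Policy API field names `mac_learning_enabled`, `mac_change_enabled`, `unknown_unicast_flooding_enabled` and `arp_snooping_config`; the profile IDs (`cml-mac-learning`, `cml-ip-discovery`) are hypothetical, and the exact values used in this lab are not shown in the post, so treat every setting as an assumption to verify against your NSX version.

```python
import json


def mac_discovery_profile(name: str) -> dict:
    """Body for a MAC discovery profile with data-plane MAC learning enabled."""
    return {
        "resource_type": "MacDiscoveryProfile",
        "display_name": name,
        "mac_learning_enabled": True,  # learn MACs from traffic on the segment
        "mac_change_enabled": True,  # lab nodes present MACs NSX did not assign
        "unknown_unicast_flooding_enabled": True,
    }


def ip_discovery_profile(name: str, arp_snooping: bool = False) -> dict:
    """Body for an IP discovery profile; ARP snooping is off by default here (assumption)."""
    return {
        "resource_type": "IPDiscoveryProfile",
        "display_name": name,
        "ip_v4_discovery_options": {
            "arp_snooping_config": {"arp_snooping_enabled": arp_snooping},
        },
    }


def profile_path(kind: str, profile_id: str) -> str:
    """Policy API path for a profile of the given kind (assumed URL layout)."""
    return f"/policy/api/v1/infra/{kind}/{profile_id}"


if __name__ == "__main__":
    # Hypothetical profile IDs, for illustration only.
    requests_to_send = [
        ("mac-discovery-profiles", "cml-mac-learning",
         mac_discovery_profile("cml-mac-learning")),
        ("ip-discovery-profiles", "cml-ip-discovery",
         ip_discovery_profile("cml-ip-discovery")),
    ]
    for kind, profile_id, body in requests_to_send:
        print("PATCH", profile_path(kind, profile_id))
        print(json.dumps(body, indent=2))
```

The profiles still have to be bound to the segment afterwards, either in the UI or through the segment's profile binding maps.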

I can't stress this enough: the Q-in-VNI feature gives us near unlimited power with other VNFs, circumventing the 10 vNIC limit in vSphere and reducing the number of segments that must be deployed to what would normally be "physical pipes".

Here's what it looks like via the API:
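A minimal sketch of what such a request body might look like, assuming the Policy API's `vlan_ids` field is what carries the guest VLAN range on an overlay segment. The transport zone path below is a placeholder, the VLAN range is an example, and nothing is sent to a manager; only the segment name comes from this lab.

```python
import json


def parse_vlan_range(vlan_range: str) -> tuple[int, int]:
    """Parse '100-200' (or a single ID such as '100') and validate the bounds."""
    low_text, _, high_text = vlan_range.partition("-")
    low = int(low_text)
    high = int(high_text) if high_text else low
    if not 0 <= low <= high <= 4094:
        raise ValueError(f"invalid VLAN range: {vlan_range!r}")
    return low, high


def trunk_segment(name: str, transport_zone_path: str, vlan_range: str) -> dict:
    """Body for an overlay segment that trunks the given guest VLAN range."""
    parse_vlan_range(vlan_range)  # fail early on a malformed range
    return {
        "resource_type": "Segment",
        "display_name": name,
        "transport_zone_path": transport_zone_path,
        "vlan_ids": [vlan_range],
    }


if __name__ == "__main__":
    body = trunk_segment(
        name="eng-lab-vn-cml-trunk",
        # Placeholder path: substitute your own overlay transport zone.
        transport_zone_path="/infra/sites/default/enforcement-points/default/transport-zones/EXAMPLE-TZ",
        vlan_range="0-4094",
    )
    print("PATCH /policy/api/v1/infra/segments/eng-lab-vn-cml-trunk")
    print(json.dumps(body, indent=2))
```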

Expose connectivity to CML

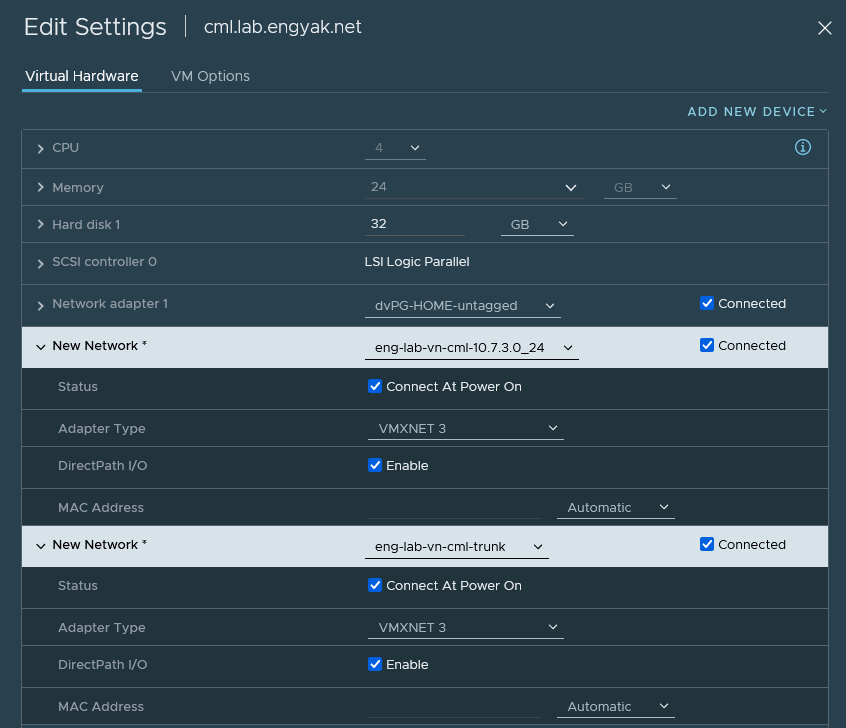

Generating the segments in NSX is complete, and now we need to get into the weeds a bit with CML. CML is, in essence, a Linux machine running containers, and has external interface support. Let's add the segments to the appropriate VM:

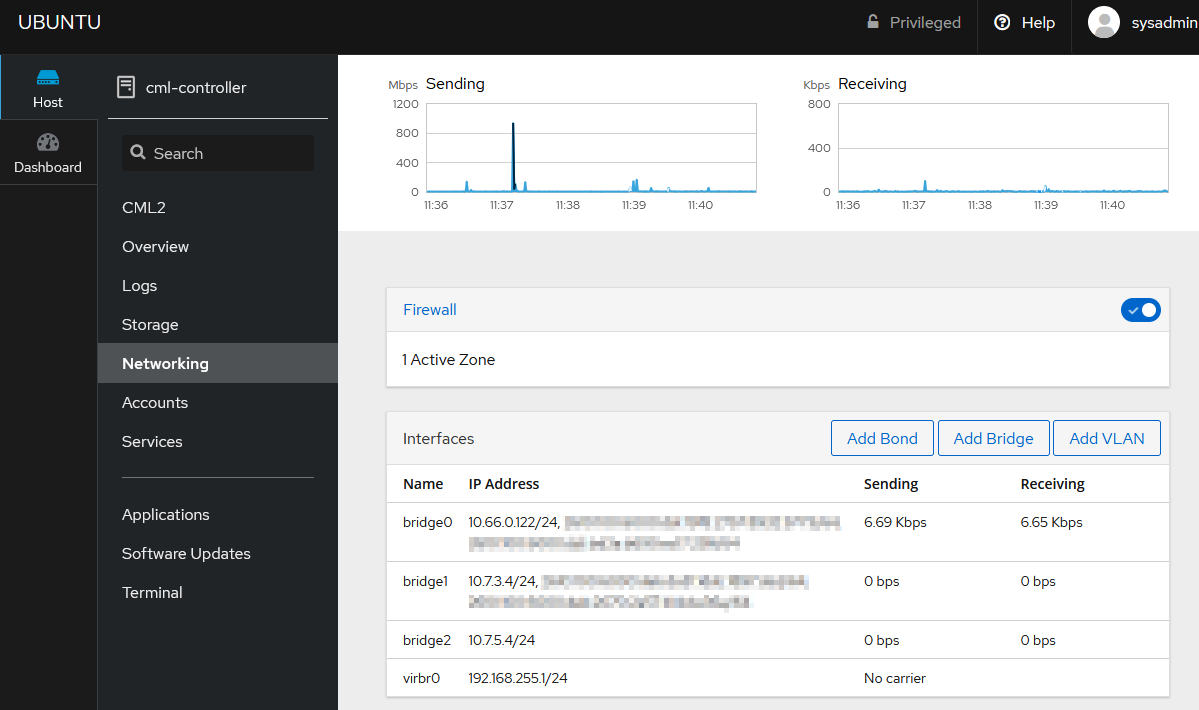

CML provides a Cockpit UI to make this easy, but the usual tools for creating a Linux bridge are available as well. We're going to attach both segments as bridge adapters:
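For readers who prefer the command line over Cockpit, the sketch below prints the iproute2 commands that would create one bridge per segment. The bridge names (`bridge1`, `bridge2`) and interface names (`ens224`, `ens256`) are hypothetical; check `ip link` on the CML VM for the real ones. The script only prints the commands, and bridges created this way do not persist across reboots.

```python
import shlex


def bridge_commands(bridge: str, uplink: str) -> list[list[str]]:
    """iproute2 commands that create a bridge and enslave one uplink to it."""
    return [
        ["ip", "link", "add", "name", bridge, "type", "bridge"],
        ["ip", "link", "set", "dev", uplink, "master", bridge],
        ["ip", "link", "set", "dev", uplink, "up"],
        ["ip", "link", "set", "dev", bridge, "up"],
    ]


if __name__ == "__main__":
    # Hypothetical bridge-to-vNIC mapping, one entry per NSX segment.
    adapters = {"bridge1": "ens224", "bridge2": "ens256"}
    for bridge, uplink in adapters.items():
        for command in bridge_commands(bridge, uplink):
            # Printed for review; run them as root once the names are confirmed.
            print(shlex.join(command))
```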

A word of warning - this is a Linux Software Bridge, and will copy everything flowing through the port. NSX helps by squelching a significant chunk of the "network storms" that result, but I would recommend not putting it on the same NSX manager or hosts as your production environments.

Leveraging CML connectivity in a Lab

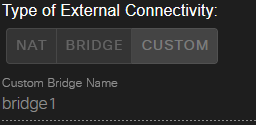

The hard part's done! To consume this new network, add a node of type External Connector, and configure it to leverage the bridge we just created:

The next step would be to deploy resources and configure them to connect to this central point - I'd recommend this approach to make nodes reachable via their management interface, i.e. deploy a management VRF and connect all nodes to it for stuff like Ansible testing. CML has an API, so the end goal here would be to spin up a test topology and test code against it, or to build a mini service provider.
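To make the API angle a little more concrete, here is a hedged sketch of the request that might add an External Connector node to a lab. It assumes the CML 2.x REST layout (`/api/v0/labs/{lab_id}/nodes`) and that the connector's `configuration` field holds the bridge name; both should be checked against the API documentation bundled with your CML release. The label `nsx-trunk` and bridge name `bridge1` are hypothetical, and the code only builds the request, it does not send it.

```python
import json


def external_connector_node(label: str, bridge: str, x: int = 0, y: int = 0) -> dict:
    """Body for an External Connector node bound to a host bridge (assumed schema)."""
    return {
        "label": label,
        "node_definition": "external_connector",
        "configuration": bridge,  # assumed: the bridge name selects the uplink
        "x": x,
        "y": y,
    }


def node_request(lab_id: str, node: dict) -> tuple[str, str, str]:
    """Return (method, path, JSON body) for creating the node in a lab."""
    return ("POST", f"/api/v0/labs/{lab_id}/nodes", json.dumps(node))


if __name__ == "__main__":
    # "LAB_ID" is a placeholder for the ID returned when the lab is created.
    method, path, body = node_request(
        "LAB_ID", external_connector_node("nsx-trunk", "bridge1")
    )
    print(method, path)
    print(body)
```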

Lessons Learned

This is a network lab, and we're taking some of the guardrails off when building something this free-form. NSX allows an engineer to do so on a per-segment basis, and insulates the "outside" network from any weird stuff you intend to do. The inherent dangers of packet flooding or spamming BPDUs outwards from a host can be contained with the built-in "segment security profiles" feature, which suggests that VMware had clear intent to support similar use-cases in the future.

NSX also enables a few other functions if in routed mode with a Tier-1. It's trivial to set up NAT policies and redistribute routes as Tier-1 statics to control the export of any internal connectivity you may or may not want touching the "real network".
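A short sketch of what those two knobs could look like as Policy API bodies, assuming the `PolicyNatRule` fields (`action`, `source_network`, `translated_network`) and the Tier-1 `route_advertisement_types` values shown here. The addresses and rule name are hypothetical examples, not values from this lab, and no request is sent.

```python
import ipaddress
import json


def snat_rule(name: str, source_cidr: str, translated_ip: str) -> dict:
    """Body for a source-NAT rule hiding a lab prefix behind a single address."""
    source = ipaddress.ip_network(source_cidr)  # raises ValueError if malformed
    translated = ipaddress.ip_address(translated_ip)
    return {
        "resource_type": "PolicyNatRule",
        "display_name": name,
        "action": "SNAT",
        "source_network": str(source),
        "translated_network": str(translated),
        "enabled": True,
    }


def tier1_advertisement() -> dict:
    """Tier-1 settings that advertise only static routes and NAT addresses."""
    return {"route_advertisement_types": ["TIER1_STATIC_ROUTES", "TIER1_NAT"]}


if __name__ == "__main__":
    # Example prefix and address only (documentation ranges).
    print(json.dumps(snat_rule("cml-lab-snat", "10.10.0.0/24", "192.0.2.10"), indent=2))
    print(json.dumps(tier1_advertisement(), indent=2))
```

Leaving connected segments out of the advertisement list is one way to keep lab prefixes from leaking upstream unless they are explicitly exported.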

I do think that this combination is a really impressive one-two punch for supporting enterprise deployments. If you ask anyone but Cisco, a typical enterprise network environment will involve many different network vendors, and having the flexibility to mix and match in a "testing environment" is a luxury that most infrastructure engineers don't have, but should.

CML Scenario

Here's the CML scenario I deployed to test this functionality: