Scale datacenters past the number of VLAN IDs with NSX-T Tier-0 and Q-in-X

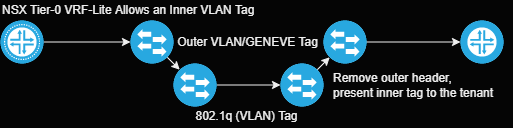

VMware introduced the ability to double-encapsulate Layer 2 frames via the "Access VLAN" option for VRF instances in NSX Data Center: https://docs.vmware.com/en/VMware-NSX-T-Data-Center/3.2/administration/GUID-4CB5796A-1CED-4F0E-ADE0-72BF7B3F762C.html

Q-in-VNI gives a capable infrastructure engineer the ability to build straightforward multitenant constructs. The documentation and our previous testing have demonstrated its capability outside of Layer 3 constructs. The objective of this post is to examine and test these capabilities with Tier-0 VRFs:

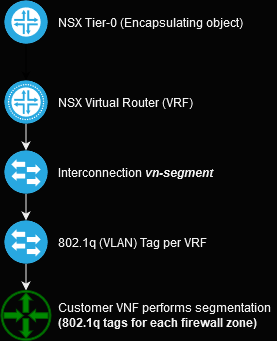

NSX Data Center provides the ability to pass a tag inside of a segment, which enables a few interesting design patterns:

- Layer 3 VPN to customer's campus, with each 802.1q tag delineating a separate "tenant", e.g. PCI/Non-PCI

- Inserting carrier workloads selectively to specific networks

- Customer empowerment - let's enable the customer to use their cloud how they please

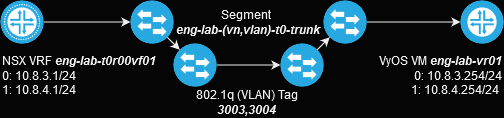

To validate this hypothesis, we will leverage the following isolated topology:

Note: VRF-Lite is required for this feature!

Q-in-VNI on NSX-T Routers

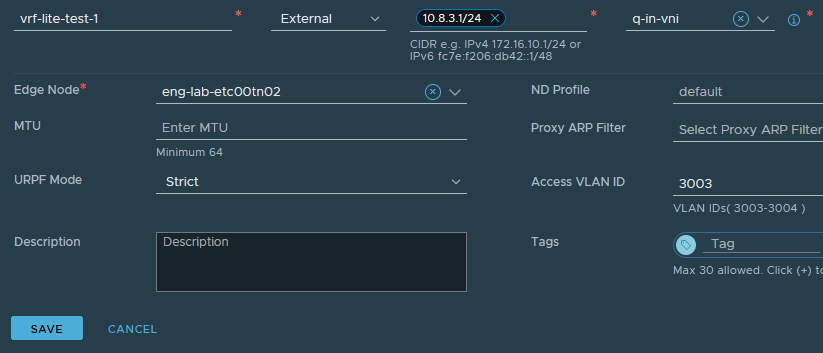

When configuring an interface on a VRF, the following option (Access VLAN ID) becomes available. Select the appropriate "inside" VLAN for each sub-interface:

We then configure the sub-interfaces - the tenant VM is unaware that it's being wrapped into an overlay:

Unsurprisingly, this feature just works. NSX-T is designed to provide a multi-tenant cloud-like environment, and VLAN caps are a huge problem in that space. In this example, we created 2 subinterfaces in the same VRF - normally tenants would not share a VLAN.
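
To put the "VLAN cap" in numbers, here is a rough sketch comparing the raw identifier space of an 802.1Q VLAN ID (12 bits) with a Geneve VNI (24 bits). These are protocol field widths only, not supported NSX limits, and the variable names are made up for illustration:

```python
# Sketch only: compares raw identifier space, not supported NSX maximums.
VLAN_ID_BITS = 12  # width of the 802.1Q VLAN ID field
VNI_BITS = 24      # width of the Geneve VNI field

# VLAN IDs 0 and 4095 are reserved, which leaves 4094 usable IDs.
usable_vlans = 2**VLAN_ID_BITS - 2
total_vnis = 2**VNI_BITS

# With Q-in-VNI, each overlay segment can carry its own set of inner VLAN tags.
inner_networks = total_vnis * usable_vlans

print(f"usable VLAN IDs:        {usable_vlans}")
print(f"possible VNIs:          {total_vnis}")
print(f"VNI x inner VLAN pairs: {inner_networks}")
```

Real deployments hit the product's configuration maximums long before they exhaust the identifier space, so treat this as an upper bound on addressing, nothing more.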

Q-in-VNI Design Patterns

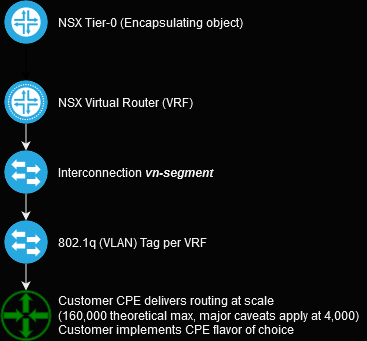

Offering Q-in-VNI on a Tier-0 solves valuable use cases for multi-tenant platform services. The primary focus of these solutions is customer empowerment - VMware isn't taking sides on matters of "vi vs emacs", "Juniper vs Cisco", etc. Instead, we as CSPs can provide a few design patterns that let customers use their own chosen methods, or even allow an ISP to integrate crisply and effectively with their telecommunications services.

NSX-T has some fairly small scalability limits for CSPs leveraging the default recommended design pattern (160 standalone Tier-0s), and the ultimate best solution is to deploy multiple NSX Data Center instances to accommodate more tenants. If the desired number of tenants is above, say, twice that, the VRF-Lite feature allows an infrastructure engineer to deploy 100 routing tables per Tier-0.

VRF-Lite enables scaling to 4,000 Tier-1 gateways at this level, and a highly theoretical maximum of 160,000, but the primary advantage of this approach is that customers can bring their own networking easily and smoothly, front-ending NSX components with their preferred Network OS. Customers and infrastructure engineers extend the feature set and reduce strain on NSX at the same time, so both the customer and the infrastructure benefit.

Note: VMware's current configuration maximums are provided here: https://configmax.esp.vmware.com/guest?vmwareproduct=VMware%20NSX

VRF-Lite can also be built to provide a solution where customers can "hair-pin" their tenant routing tables to a virtual firewall over the same VN-Segment. Enterprise teams leveraging NSX Data Center benefit the most from this approach, because common virtual firewall deployments are limited by the number of interfaces available on a VM. This design pattern empowers customers by permitting infrastructure engineers to construct thousands of macrosegmentation zones if desired.

Q-in-Q on NSX-T Routers

Time to test out the more complex option!

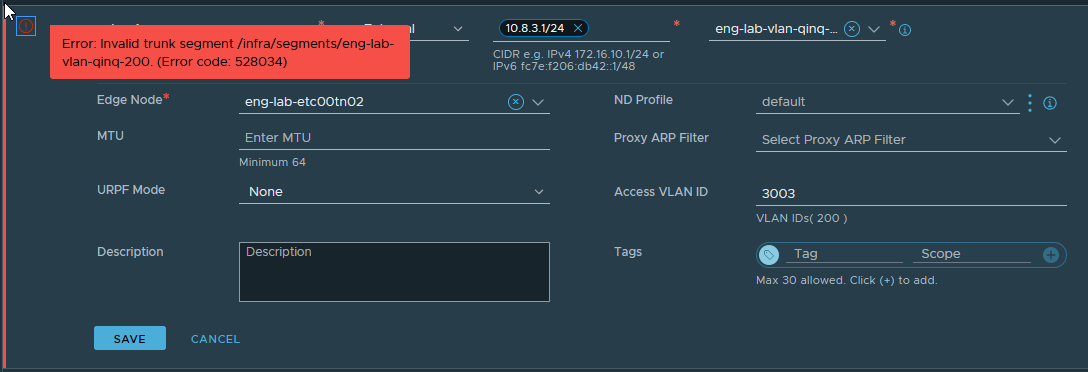

When I attempt to configure an internal tag with VRF-Lite subinterfaces, the following error is displayed:

Sadly, it appears that Q-in-Q is not supported yet, only Q-in-VNI. Perhaps this feature will be provided at a later date.
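
For reference, this is roughly what Q-in-Q means on the wire: two stacked VLAN tags, an outer service tag and an inner customer tag. The sketch below packs both tags with Python's `struct` module. The VLAN IDs (100 and 200) and the function names are hypothetical, and it assumes the 802.1ad TPID (0x88A8) for the outer tag, although some platforms use 0x8100 for both:

```python
import struct

TPID_CTAG = 0x8100  # 802.1Q customer (inner) tag
TPID_STAG = 0x88A8  # 802.1ad service (outer) tag; assumed here


def vlan_tag(tpid: int, vid: int, pcp: int = 0, dei: int = 0) -> bytes:
    """Pack one 4-byte VLAN tag: a 16-bit TPID followed by a 16-bit TCI."""
    if not 0 <= vid <= 4095:
        raise ValueError("VLAN ID must fit in 12 bits")
    if not 0 <= pcp <= 7 or dei not in (0, 1):
        raise ValueError("PCP is 3 bits and DEI is 1 bit")
    # TCI layout: 3 bits priority, 1 bit drop-eligible, 12 bits VLAN ID.
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", tpid, tci)


def qinq_header(outer_vid: int, inner_vid: int) -> bytes:
    """Return the outer tag followed by the inner tag."""
    return vlan_tag(TPID_STAG, outer_vid) + vlan_tag(TPID_CTAG, inner_vid)


# Hypothetical VLAN IDs, for illustration only.
header = qinq_header(outer_vid=100, inner_vid=200)
print(header.hex(), len(header), "bytes")
```

With Q-in-VNI the overlay's VNI takes the place of the outer tag, so only the inner tag travels inside the segment.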

Here's the VyOS configuration to perform Q-in-Q:

Retrospective

- Learn, hypothesize, test is an important cycle for learning and design, and this is why we build home labs. NSX Data Center appeared to support Q-in-Q tagging - but the feature was ultimately for passing a trunk directly to a specific VLAN ID in a port-group.

- vSphere vDS does not appear to allow Q-in-Q to trunk outwards to other port-groups that do not support VLAN trunking, either.

- Make sure that the MTU can hold both the inner and outer headers without loss. I set the MTU to 1700, but each 802.1q tag only adds 4 bytes, so two stacked tags need just 8 bytes of extra MTU.
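
As a quick sanity check on the MTU point, here is a minimal sketch of the arithmetic. It assumes a 1500-byte tenant MTU and counts only VLAN tags at 4 bytes each. Overlay (Geneve) overhead is deliberately left out, and the function name is hypothetical:

```python
VLAN_TAG_BYTES = 4  # size of one 802.1Q tag (TPID + TCI)


def mtu_with_tags(base_mtu: int, stacked_tags: int) -> int:
    """Return the MTU needed to carry base_mtu bytes plus the given VLAN tags."""
    if stacked_tags < 0:
        raise ValueError("stacked_tags must not be negative")
    return base_mtu + stacked_tags * VLAN_TAG_BYTES


# Assumed values: 1500-byte tenant MTU, two stacked tags, 1700 configured.
needed = mtu_with_tags(base_mtu=1500, stacked_tags=2)
headroom = 1700 - needed
print(f"needed MTU: {needed}, headroom at 1700: {headroom}")
```

A setting of 1700 therefore leaves plenty of room for the tags themselves. Whatever remains has to cover the overlay encapsulation on the transport network.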