Different Methods to carry 802.1q tags with VMware vDS and NSX-T

VMware's vDS is a bit of a misnomer

In a previous post, I covered the concept of transitivity in networking. In Layer 2 (Ethernet) land, transitivity is critical to understanding how VMware's vSphere Distributed Switch (vDS) works.

The statement "VMware's vSphere Distributed Switch is not a switch" seems controversial, but take a moment to reflect: when you plug in a second uplink on an ESXi host, does the host participate in spanning tree?

Testing this concept at a basic level is straightforward. If the ESXi host were actually a switch running spanning tree, it would send BPDUs, and enabling BPDU Guard on the host-facing switch port would err-disable that port immediately. It doesn't. This non-transitive behavior is quite useful to a capable infrastructure engineer.
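For the curious, here's roughly what BPDU Guard is watching for. This is a minimal Python sketch, not any vendor's implementation: it assumes untagged frames and IEEE 802.1D/802.1w/802.1s BPDUs (Cisco PVST+ uses a different destination MAC and SNAP encapsulation), and `is_stp_bpdu` is a hypothetical helper name. The ESXi host itself doesn't originate frames like this, which is why the port stays up.

```python
# IEEE 802.1D Bridge Group Address: destination MAC of STP/RSTP/MSTP BPDUs.
STP_GROUP_MAC = bytes.fromhex("0180c2000000")
LLC_STP = b"\x42\x42\x03"  # DSAP 0x42, SSAP 0x42, control 0x03 (UI frame)


def is_stp_bpdu(frame: bytes) -> bool:
    """Return True if an untagged Ethernet frame looks like an IEEE STP BPDU."""
    # dst(6) + src(6) + length(2) + LLC(3) + protocol identifier(2)
    if len(frame) < 19:
        return False
    if frame[0:6] != STP_GROUP_MAC:
        return False
    # BPDUs use 802.3 length framing: a value <= 1500 rather than an EtherType.
    if int.from_bytes(frame[12:14], "big") > 1500:
        return False
    # LLC header followed by protocol identifier 0x0000 (Spanning Tree Protocol).
    return frame[14:17] == LLC_STP and frame[17:19] == b"\x00\x00"


if __name__ == "__main__":
    # Configuration BPDU: 3-byte LLC + 35-byte BPDU body = length field 38.
    bpdu = STP_GROUP_MAC + bytes(6) + (38).to_bytes(2, "big") + LLC_STP + bytes(35)
    ipv4 = bytes.fromhex("005056000001") + bytes(6) + b"\x08\x00" + bytes(46)
    print(is_stp_bpdu(bpdu), is_stp_bpdu(ipv4))
```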

A "Layer 2 Proxy"

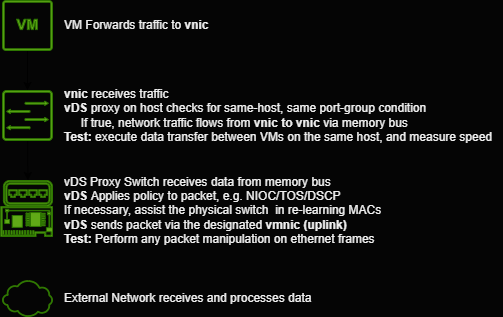

VMware's vDS is quite a bit more useful than a simple Layer 2 transitive network device. Each ESXi host accepts data from a virtual machine, then uses a "host proxy switch" to take each packet and rewrite its Layer 2 header in a three-stage process, as illustrated above.

Note: For a more detailed explanation of VMware's vDS architecture and how it's implemented, VMware's documentation is here.

Note: VMware's naming for network interfaces can be a little confusing; here's a cheat sheet:

- vnic: A workload's network adapter

- vmnic: A hypervisor's uplink

- vmknic: A hypervisor's Layer 3 adapter

A common misinterpretation of vDS is that the VLAN ID assigned to a virtual machine is some form of stored variable in vSphere. It isn't: vDS was designed around applying network policy, and an 802.1q tag is simply another policy.
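To make "a tag is just policy" concrete, here's a minimal Python sketch of pushing and popping an 802.1q tag, with the VLAN ID looked up from a port-group table at egress rather than stored on the VM. The port-group names, `PORT_GROUP_VLAN`, and `egress_to_uplink` are hypothetical, and the sketch assumes the vnic hands over untagged frames (the common Virtual Switch Tagging setup).

```python
import struct
from typing import Optional, Tuple

TPID_8021Q = 0x8100


def push_vlan_tag(frame: bytes, vlan_id: int, pcp: int = 0) -> bytes:
    """Insert an 802.1q tag after the source MAC (DEI bit left at 0)."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be 1-4094")
    if not 0 <= pcp <= 7:
        raise ValueError("PCP must be 0-7")
    tci = (pcp << 13) | vlan_id
    return frame[:12] + struct.pack("!HH", TPID_8021Q, tci) + frame[12:]


def pop_vlan_tag(frame: bytes) -> Tuple[bytes, Optional[int]]:
    """Remove an outer 802.1q tag if present; return (frame, VLAN ID or None)."""
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        return frame, None
    return frame[:12] + frame[16:], tci & 0x0FFF


# Hypothetical port-group policy: the tag is looked up here at egress,
# not stored on the VM. None means the port group sends frames untagged.
PORT_GROUP_VLAN = {"pg-web": 100, "pg-db": 200, "pg-untagged": None}


def egress_to_uplink(frame: bytes, port_group: str) -> bytes:
    """Apply the port group's VLAN policy to an untagged frame from a vnic."""
    vlan_id = PORT_GROUP_VLAN[port_group]
    return frame if vlan_id is None else push_vlan_tag(frame, vlan_id)
```

Moving a VM between `pg-web` and `pg-db` changes the tag on the wire without touching anything on the VM, which is the point: the tag belongs to the port group's policy.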

vDS is designed with tenancy in mind, so traffic is not allowed to transit between different port-groups, even when they share the same VLAN ID. This non-transitive behavior achieves two goals at once: it gives an infrastructure engineer total control of data egress on a vSphere host, and it provides adequate segmentation to build a multi-tenant VMware Cloud.

Replacing the Layer 2 header on workload packets is extremely powerful: vDS essentially lets an infrastructure engineer write policy that changes packet behavior. Here are some examples:

- For a VM's attached vnic, apply an 802.1q tag (or don't!)

- For a VM's attached vnic, limit traffic to 10 Megabits/s

- For a VM's attached vnic, attach a DSCP tag

- For a VM's attached vnic, deny promiscuous mode/MAC spoofing

- For a VM's attached vnic, prefer specific vmnics

- For a VM's attached vnic, export IPFIX
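Several of these policies can be modeled as a per-vnic admission check. The sketch below is hypothetical: `VnicPolicy` and its fields are illustrative names, not vSphere API objects, and the 10 Mbit/s limit is modeled as a simple token bucket rather than VMware's actual traffic-shaping algorithm (which exposes average bandwidth, peak bandwidth, and burst size). It shows a forged-transmit check followed by rate limiting.

```python
from dataclasses import dataclass, field


@dataclass
class VnicPolicy:
    """Hypothetical per-vnic policy; names are illustrative, not vSphere API fields."""
    assigned_mac: bytes
    allow_forged_transmits: bool = False
    rate_bps: int = 10_000_000  # 10 Mbit/s average rate
    burst_bytes: int = 15_000   # bucket depth in bytes
    _tokens: float = field(init=False, default=0.0)
    _last: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        self._tokens = float(self.burst_bytes)  # start with a full bucket

    def admit(self, frame: bytes, now: float) -> bool:
        """Return True if the frame may leave the vnic at time `now` (seconds)."""
        # Forged-transmit check: source MAC must match the MAC assigned to the vnic.
        if not self.allow_forged_transmits and frame[6:12] != self.assigned_mac:
            return False
        # Token bucket: refill at rate_bps / 8 bytes per second, capped at burst_bytes.
        self._tokens = min(float(self.burst_bytes),
                           self._tokens + (now - self._last) * self.rate_bps / 8)
        self._last = now
        if len(frame) > self._tokens:
            return False  # over the rate limit: drop
        self._tokens -= len(frame)
        return True
```

In practice `now` would come from `time.monotonic()`; passing it explicitly keeps the logic deterministic and easy to test.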

NSX expands on this capability quite a bit by adding overlay network functions:

- For a VM's attached vnic, publish the MAC to the global controller table (if it isn't already there) and send the data over a GENEVE or VXLAN tunnel

- For a VM's attached vnic, only allow speakers with valid ARP and MAC entries (validated via VMware Tools or Trust-on-First-Use) to speak on a given segment

- For a VM's attached vnic, send traffic to the appropriate distributed or service router
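The overlay path boils down to a table lookup plus encapsulation. Here's a minimal Python sketch of the GENEVE header from RFC 8926 (version 0, no options, protocol type 0x6558 for an Ethernet payload, UDP port 6081), with a plain dictionary standing in for the controller's MAC table. `MAC_TABLE`, `publish`, and `forward` are hypothetical; NSX-T's real GENEVE headers carry options, and the outer IP/UDP headers aren't built here.

```python
import struct
from typing import Dict, Tuple

GENEVE_UDP_PORT = 6081   # IANA-assigned UDP destination port for GENEVE
PROTO_ETHERNET = 0x6558  # Transparent Ethernet Bridging: payload is a full L2 frame


def geneve_encap(inner_frame: bytes, vni: int) -> bytes:
    """Prepend a minimal 8-byte GENEVE header (version 0, no options)."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI is a 24-bit value")
    # Byte 0: Ver(2) | Opt Len(6) = 0; byte 1: O/C flags = 0; bytes 2-3: protocol type.
    header = struct.pack("!BBH", 0, 0, PROTO_ETHERNET)
    # Bytes 4-6: VNI; byte 7: reserved.
    header += (vni << 8).to_bytes(4, "big")
    return header + inner_frame


def geneve_decap(packet: bytes) -> Tuple[int, bytes]:
    """Return (VNI, inner Ethernet frame), skipping any options."""
    ver_optlen, _flags, proto = struct.unpack("!BBH", packet[:4])
    if proto != PROTO_ETHERNET:
        raise ValueError("not an Ethernet payload")
    opt_len = (ver_optlen & 0x3F) * 4  # Opt Len counts 4-byte words
    vni = int.from_bytes(packet[4:7], "big")
    return vni, packet[8 + opt_len:]


# Hypothetical stand-in for the controller's table: (VNI, MAC) -> remote TEP IP.
MAC_TABLE: Dict[Tuple[int, bytes], str] = {}


def publish(vni: int, mac: bytes, tep_ip: str) -> None:
    """Publish a MAC to the table only if it isn't already there."""
    MAC_TABLE.setdefault((vni, mac), tep_ip)


def forward(frame: bytes, vni: int) -> Tuple[str, int, bytes]:
    """Look up the destination TEP; return (outer dst IP, UDP port, GENEVE payload)."""
    tep_ip = MAC_TABLE[(vni, frame[0:6])]
    return tep_ip, GENEVE_UDP_PORT, geneve_encap(frame, vni)
```

A destination MAC missing from the table raises `KeyError` here; a real overlay has to handle broadcast, unknown-unicast, and multicast traffic, which this sketch doesn't model.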

NSX also enables two things for NFV (network functions virtualization) that are incredibly useful: NFV service chaining and Q-in-VNI encapsulation.

Q-in-VNI encapsulation is pretty neat: it allows an "inside" Virtual Network Function (VNF) to have total autonomy over inner 802.1q tags, empowering an infrastructure engineer to create a topology (with segments) and hand complete control to the consumer of that app. Here's an example packet running inside a Q-in-VNI enabled segment (howto is here).
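The key property of Q-in-VNI is that the overlay keys on the VNI and leaves the inner 802.1q tag alone. This hypothetical Python sketch (function names and VNIs 7001/7002 are illustrative, and GENEVE options are omitted) shows two VNFs on different segments both using inner VLAN 10 without colliding.

```python
import struct
from typing import Optional


def push_customer_tag(frame: bytes, vlan_id: int) -> bytes:
    """VNF side: insert its own 802.1q tag (TPID 0x8100) after the source MAC."""
    return frame[:12] + struct.pack("!HH", 0x8100, vlan_id & 0x0FFF) + frame[12:]


def geneve_encap(inner_frame: bytes, vni: int) -> bytes:
    """Overlay side: minimal GENEVE header (no options); the inner tag is untouched."""
    return struct.pack("!BBH", 0, 0, 0x6558) + (vni << 8).to_bytes(4, "big") + inner_frame


def vni_of(packet: bytes) -> int:
    """Read the 24-bit VNI from bytes 4-6 of the GENEVE header."""
    return int.from_bytes(packet[4:7], "big")


def inner_vlan(packet: bytes) -> Optional[int]:
    """Read the VNF's 802.1q VLAN ID back out of a GENEVE packet without options."""
    inner = packet[8:]
    if inner[12:14] != b"\x81\x00":
        return None
    return int.from_bytes(inner[14:16], "big") & 0x0FFF


if __name__ == "__main__":
    base = bytes.fromhex("005056000002") + bytes.fromhex("005056000001") + b"\x08\x00" + bytes(46)
    a = geneve_encap(push_customer_tag(base, 10), 7001)  # tenant A's segment
    b = geneve_encap(push_customer_tag(base, 10), 7002)  # tenant B's segment
    print(vni_of(a), inner_vlan(a), vni_of(b), inner_vlan(b))
```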

NSX Data Center is not just for virtualizing the data center anymore. This capability, combined with the other precursors (generating network configurations with a CI tool, automatically deploying changes, virtualization), is the future of reliable enterprise networking.